The initial discovery sessions are intended to catalog the current state of data assets, data platform technologies, and existing data use cases. Once this information is captured, the next step is to identify gaps or challenges and then create a prioritized list of potential future use cases.

Discovery is performed through a series of interviews and documentation reviews. Interviews are conducted with each stakeholder group, and detailed notes are taken to document relevant findings. Additional interviews may be conducted when a related discovery session uncovers new information. Interviews are complemented by documentation that covers topics such as:

- Architecture

- Roadmaps

- Process flows

- Business Requirements

- Business Plans

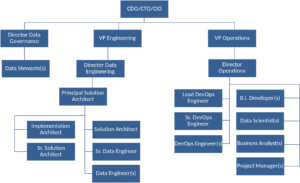

- Org charts

Example Questions to Ask During Interviews

Non-IT Questions

- How do you get value out of the data you use?

- What tools do you use to answer difficult questions that come from your business unit (BU) leadership?

IT Questions

- Describe the architecture of the current data platform.

- What source systems are in scope for the platform? Describe the available data domains, their size, and any transformations that happen within each source system.

Near the end of Discovery, it is important to catalog and summarize use cases, gaps, and priorities. This summary makes it possible to identify a primary use case that can drive the development of the platform.

Identify a Primary Use Case That Drives Action and Decision-Making

Your primary use case is the focal point of your data strategy; it’s what will drive the value home. The ideal primary use case should align with your business’s top priorities and goals while also having the potential to be dramatically improved by data. The primary use case should achieve two main objectives:

- Exercise enough of the platform’s capabilities that implementing it accelerates subsequent use cases.

- Be impactful enough to share with executive leadership, so that they see the value of the platform and are more inclined to approve subsequent use cases.

Questions for Consideration as You Identify Your Primary Use Case:

- How long would it take to solve?

- How likely are you to be able to deliver it?

- Can you tackle it within your existing tech stack?

- Is this solvable with your existing team or do you need to hire more people?

- Are there any regulatory risks involved?
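One way to turn the questions above into a comparable ranking is a simple weighted scorecard. The sketch below is a hypothetical illustration, not a prescribed method. The criterion names, the 1-to-5 scale, the weights, and the sample scores are all assumptions that you would replace with values agreed on during Discovery.

```python
from dataclasses import dataclass

# Hypothetical criteria derived from the consideration questions, with illustrative
# weights that sum to 1.0. Each criterion is scored from 1 (unfavorable) to 5
# (favorable), so a higher weighted total means a more attractive candidate.
WEIGHTS: dict[str, float] = {
    "time_to_solve": 0.20,        # 5 = can be solved quickly
    "delivery_confidence": 0.25,  # 5 = very likely to deliver
    "fits_tech_stack": 0.20,      # 5 = solvable with the existing tech stack
    "team_capacity": 0.20,        # 5 = no new hires needed
    "regulatory_risk": 0.15,      # 5 = little or no regulatory risk
}


@dataclass
class UseCase:
    name: str
    scores: dict[str, int]  # criterion name -> score from 1 to 5

    def weighted_score(self) -> float:
        # Refuse to score a use case that skipped any criterion
        missing = WEIGHTS.keys() - self.scores.keys()
        if missing:
            raise ValueError(f"{self.name} is missing scores for: {sorted(missing)}")
        return sum(WEIGHTS[c] * self.scores[c] for c in WEIGHTS)


def rank(use_cases: list[UseCase]) -> list[tuple[str, float]]:
    """Return (name, score) pairs sorted from highest to lowest score."""
    scored = [(uc.name, round(uc.weighted_score(), 2)) for uc in use_cases]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Illustrative scores only; not measurements of any real project
    candidates = [
        UseCase("Basket analysis", {
            "time_to_solve": 4, "delivery_confidence": 4, "fits_tech_stack": 5,
            "team_capacity": 4, "regulatory_risk": 5}),
        UseCase("Real-time equipment monitoring", {
            "time_to_solve": 2, "delivery_confidence": 3, "fits_tech_stack": 2,
            "team_capacity": 2, "regulatory_risk": 4}),
    ]
    for name, score in rank(candidates):
        print(f"{name}: {score}")
```

The scorecard does not replace judgment. It mainly makes the trade-offs explicit so stakeholders can debate the weights rather than the conclusion.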

Analytical vs. Operational Use Cases

Use cases fall along a spectrum, with Operational Data Products at one end and Analytical Data Products at the other. While both rely on the same underlying data, they have very different requirements. The primary dimension that differentiates them is their impact on generating revenue, producing products, or interacting with clients.

What Are Analytical Data Products?

Analytical data products are most commonly used to inform decision-making and analyze specific business functions. When they are not functioning, there is little impact on customers, revenue, or production. These are the use cases we typically recommend prioritizing: they often have a significant impact on the overall business but do not require a large upfront investment or much ongoing support to manage.

What Are Operational Data Products?

Operational data products are used to run day-to-day business operations. Typically, when they go down, there is a large impact on customers, revenue, or production.

Example Use Cases

Analytical – BI

- Basket Analysis – Ad hoc analysis that determines which products customers typically purchase together.

- Product Drill Downs – Helps determine product or feature sales by region/customer/distributor.
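As a rough illustration of what basket analysis computes, the sketch below counts how often each pair of products appears in the same basket and reports the pair's support, meaning the share of baskets that contain both products. The transactions are made-up sample data. A production analysis would typically run over the platform's transaction tables and might use an association-rule algorithm such as Apriori instead of this brute-force pair count.

```python
from collections import Counter
from itertools import combinations


def pair_support(baskets: list[set[str]], min_support: float = 0.0) -> list[tuple[tuple[str, str], float]]:
    """Return product pairs with their support, most frequent first.

    Support = (number of baskets containing both products) / (total baskets).
    """
    if not baskets:
        return []
    counts: Counter = Counter()
    for basket in baskets:
        # sorted() gives each pair a canonical order, so ("a", "b") and ("b", "a") match
        for pair in combinations(sorted(basket), 2):
            counts[pair] += 1
    total = len(baskets)
    results = [(pair, n / total) for pair, n in counts.items() if n / total >= min_support]
    # Highest support first; ties broken alphabetically for stable output
    return sorted(results, key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    # Hypothetical sample transactions; each set is one customer's basket
    sample = [
        {"bread", "butter", "milk"},
        {"bread", "butter"},
        {"milk", "cereal"},
        {"bread", "milk"},
    ]
    for (a, b), support in pair_support(sample, min_support=0.5):
        print(f"{a} + {b}: {support:.0%}")
```

Because the result is informational, a slow or failed run simply delays an insight, which is what places this on the analytical end of the spectrum.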

Operational

- Inventory Forecasting – Estimates the inventory levels required for a specified period.

- Real-Time Equipment & Process Monitoring – Monitors the health of manufacturing equipment in real time or near real time to increase efficiency.
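To make the monitoring use case more concrete, here is a minimal sketch of one common pattern. It keeps a sliding window of recent sensor readings and raises an alert when the rolling average crosses a threshold. The window size, threshold, and readings are hypothetical. A real deployment would usually consume readings from a streaming source rather than a Python list.

```python
from collections import deque
from typing import Iterable, Iterator


def rolling_alerts(readings: Iterable[float], window: int, threshold: float) -> Iterator[tuple[int, float]]:
    """Yield (index, rolling_mean) whenever the mean of the last `window`
    readings exceeds `threshold`. No alerts are raised until the window is full."""
    if window <= 0:
        raise ValueError("window must be positive")
    recent: deque = deque(maxlen=window)  # the oldest reading is dropped automatically
    for i, value in enumerate(readings):
        recent.append(value)
        if len(recent) == window:
            mean = sum(recent) / window
            if mean > threshold:
                yield i, mean


if __name__ == "__main__":
    # Hypothetical temperature readings from one machine, sampled at a fixed interval
    temps = [70, 72, 71, 85, 90, 95, 74]
    for index, mean in rolling_alerts(temps, window=3, threshold=80):
        print(f"reading {index}: rolling mean {mean:.1f} exceeds threshold")
```

Averaging over a window smooths out single noisy readings. The trade-off is a short detection delay, which matters for operational products where downtime directly affects production.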